A Forward-Deployed Case Study in AI Prototype Validation and Path to Production

Synopsis

This case study documents a 0-to-1 product development effort. I was tasked with leading an AI-native initiative spanning both product development and forward deployment responsibilities, building and validating a proof-of-concept prototype with Model Context Protocol integration within two weeks. Through testing and feedback with four enterprise customers, I believe we validated the product direction. The prototype showcased AI-powered natural language querying of identity datasets, achieved production-ready performance metrics, and confirmed strong market demand for AI-native identity services.

The Context

I was asked to take on the role of "forward-deployed engineer", a position that would combine product discovery with rapid prototyping and execution. This was exactly the type of work I wanted to be doing, but it certainly wasn't the environment or circumstances I wanted to be doing it in.

The mandate from leadership was roughly: discover what customers actually needed in an AI-powered identity platform, build something that proved the concept worked, and do it fast enough to matter.

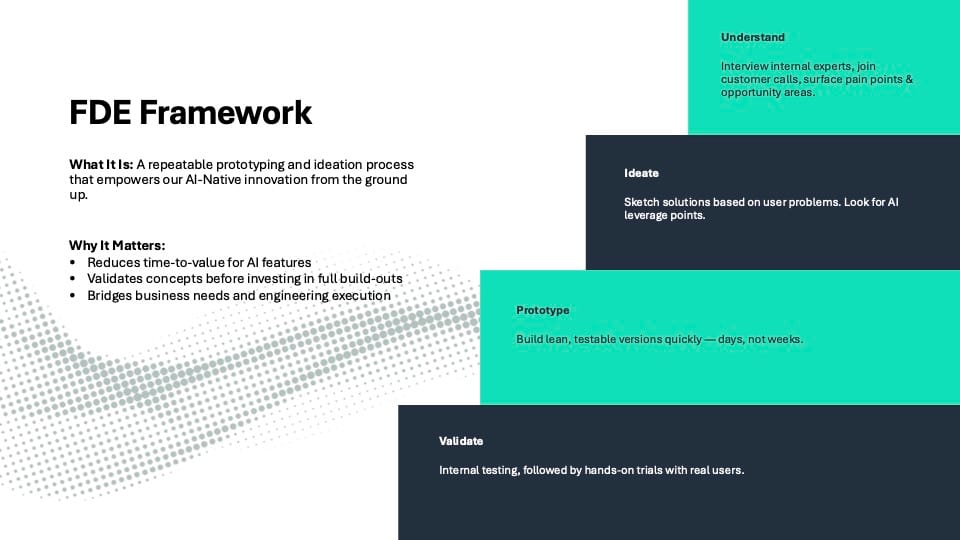

Methodology: Adapting the GV Design Sprint

When faced with vague requirements like "make something that lets us talk to our data" and "build an AI thing," I knew I needed a structured approach that could cut through ambiguity and deliver clarity quickly. The Google Ventures Design Sprint methodology provided the ideal framework, but it needed significant adaptation for our specific context.

Why the Design Sprint Approach

The traditional Design Sprint's strength lies in its ability to rapidly move from problem identification to tested solutions. However, our situation required modifications:

- Limited team: Rather than a full cross-functional team, I was working primarily solo with targeted input from key stakeholders

- Technical validation: Unlike typical design sprints focused on user experience, we needed to prove technical feasibility alongside user value

Key Modifications for Our Context

I distilled the process into four core phases that could be executed efficiently:

- Understand: Rapid stakeholder interviews to identify pain points and opportunities

- Ideate: Generate AI-powered solutions based on gathered insights

- Prototype: Build working proof-of-concept as quickly as possible

- Validate: Test internally first, then with customers

The Two-Slide Framework

When I was asked to give an outline of the process, I created a simple two-slide summary of the framework.

This framework, while comedically terse, became crucial for maintaining clarity and buy-in.

The adapted methodology needed to account for our resource constraints while still maintaining the rigor necessary to make sound product decisions. Speed was essential, but not at the expense of validation.

Discovery Phase: From Vague Requirements to Customer Reality

The discovery phase began with a stark reality: the initial requirements were deliberately vague. "Make something that lets us talk to our data, build an 'AI thing'" was the extent of the brief. This ambiguity, while initially frustrating, ended up providing the freedom to explore what customers truly needed rather than being constrained by preconceived solutions.

Internal Stakeholder Interviews

I started by conducting rapid interviews with key internal stakeholders across product, engineering, and leadership:

- Services team: Understanding existing customer pain points and feature requests from the point of view of our in-house identity experts, who have built careers providing identity services to enterprises

- Engineering team: Assessing data availability, technical constraints, and infrastructure capabilities

- Leadership: Clarifying business objectives and success criteria

These conversations revealed critical insights about our data architecture, the quality of our existing datasets, and the technical feasibility of various AI approaches. Most importantly, they helped me understand what we could realistically deliver within our timeline and resource constraints.

Customer Validation

I had gathered great data points from our experts on the services team, but the real breakthrough came when I secured four strategic customer calls that provided direct insight into market demand:

Bryan: revealed he had already achieved measurable ROI using AI tools. He'd used Google Gemini to analyze 1,100 support tickets and discovered that approximately 30% were access requests, an insight that helped him secure additional IT headcount. This validated that customers were already seeing value from AI analysis of identity data, even with basic, strung-together tools.

Robert: identified an industry-wide crisis that perfectly aligned with our AI vision. "We collectively as an industry have not cracked the nut on how we tell the access management risk story to a board," he explained. This revealed a universal CISO pain point around executive reporting that AI could potentially solve.

Todd: demonstrated the most advanced AI adoption, running local Llama models with Claude Desktop and expressing strong interest in MCP integration for quarterly executive reporting. This showed that technically sophisticated customers were ready for advanced AI integrations.

Adam & Aubrey: The team confirmed the challenges of large enterprise data quality and the potential value of AI-powered compliance checking across hybrid environments.

Key Insights That Shaped Product Direction

These conversations crystallized four critical insights:

- Universal demand for AI-driven data normalization: Every customer struggled with making sense of data across different platforms

- Service account management pain: All customers mentioned challenges with orphaned or unmanaged service accounts

- Natural language querying as core requirement: Customers wanted to ask questions in plain English rather than learn complex query languages or rely on existing, brittle BI tooling.

- Executive reporting as critical CISO need: There was a clear gap in tools that could translate technical identity data into board-ready reports.

The discovery phase transformed vague internal requirements into concrete customer-validated use cases, providing the foundation for a targeted prototype that could deliver real value.

Building the AI Prototype: From Concept to Functional Software

With clear customer insights in hand, I faced the challenge of translating market need into working technology. The core innovation would be AI-powered natural language querying of enterprise identity datasets, something that sounded simple but required solving several complex technical challenges.

The Core Innovation

The prototype centered on enabling AI agents to directly query identity data through natural language. Instead of requiring users to learn complex query languages or navigate multiple dashboards, they could simply ask questions like "Who has been recently terminated and still has access?" and receive instant, structured responses.

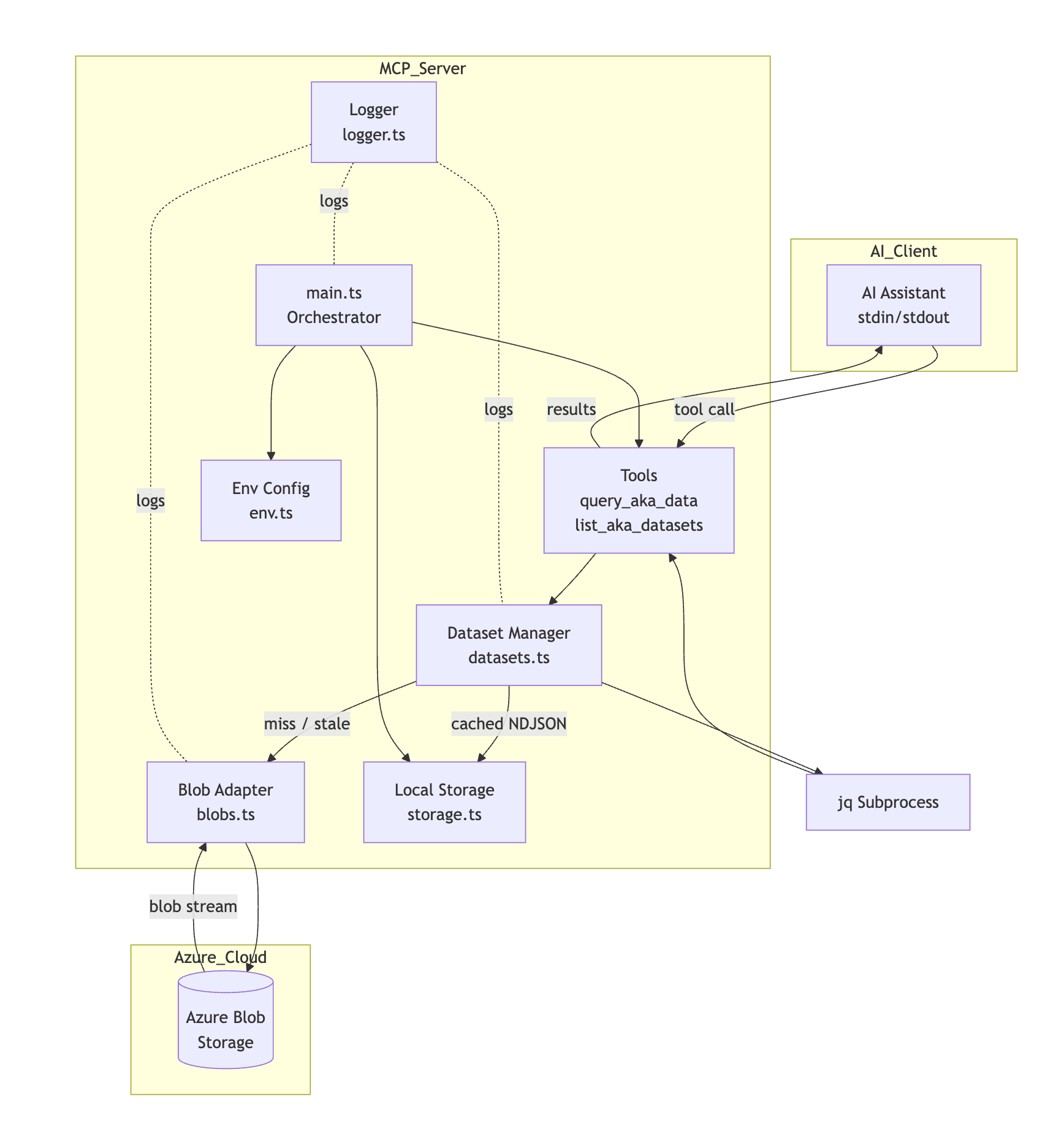

Technical Architecture: The MCP Server

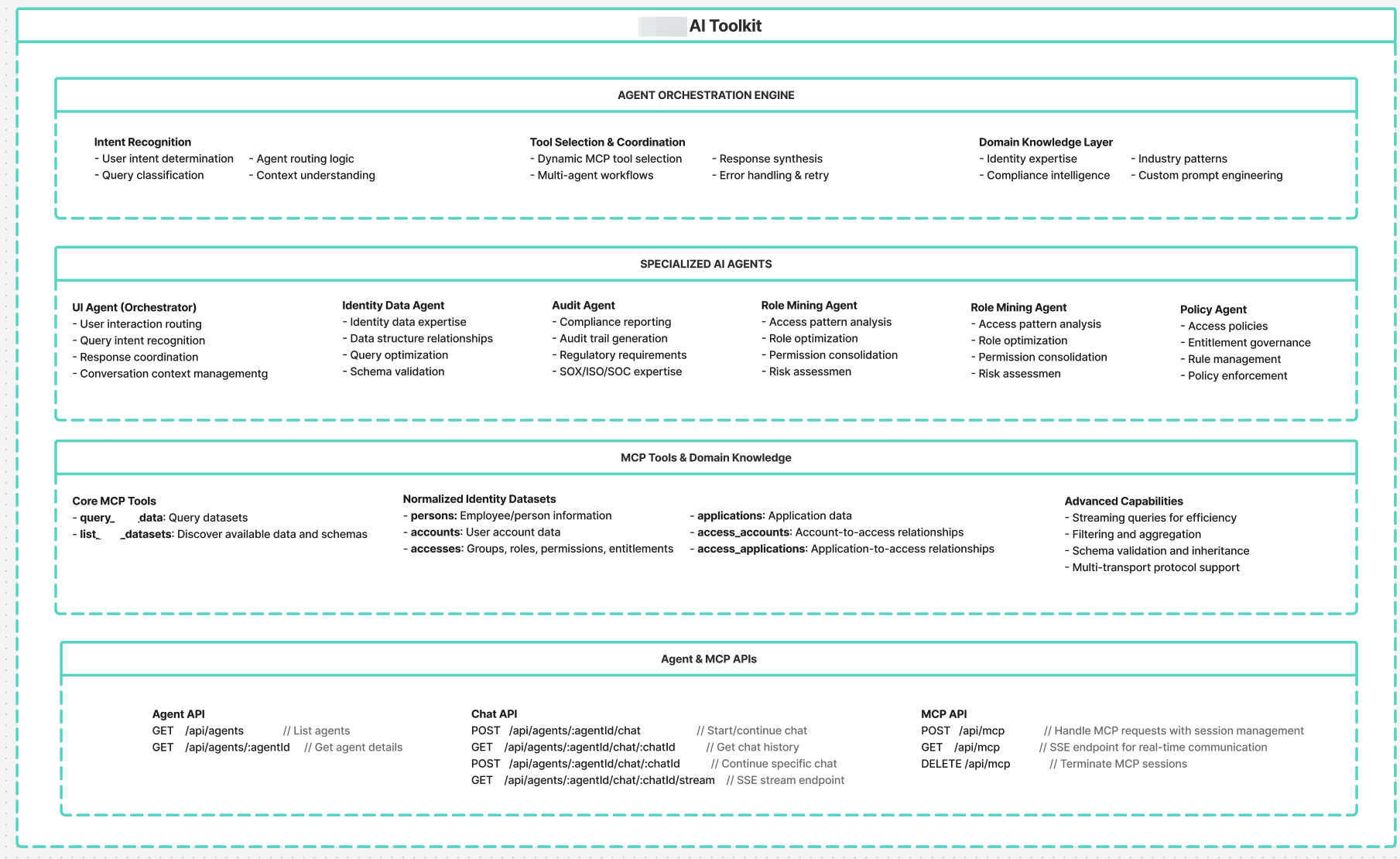

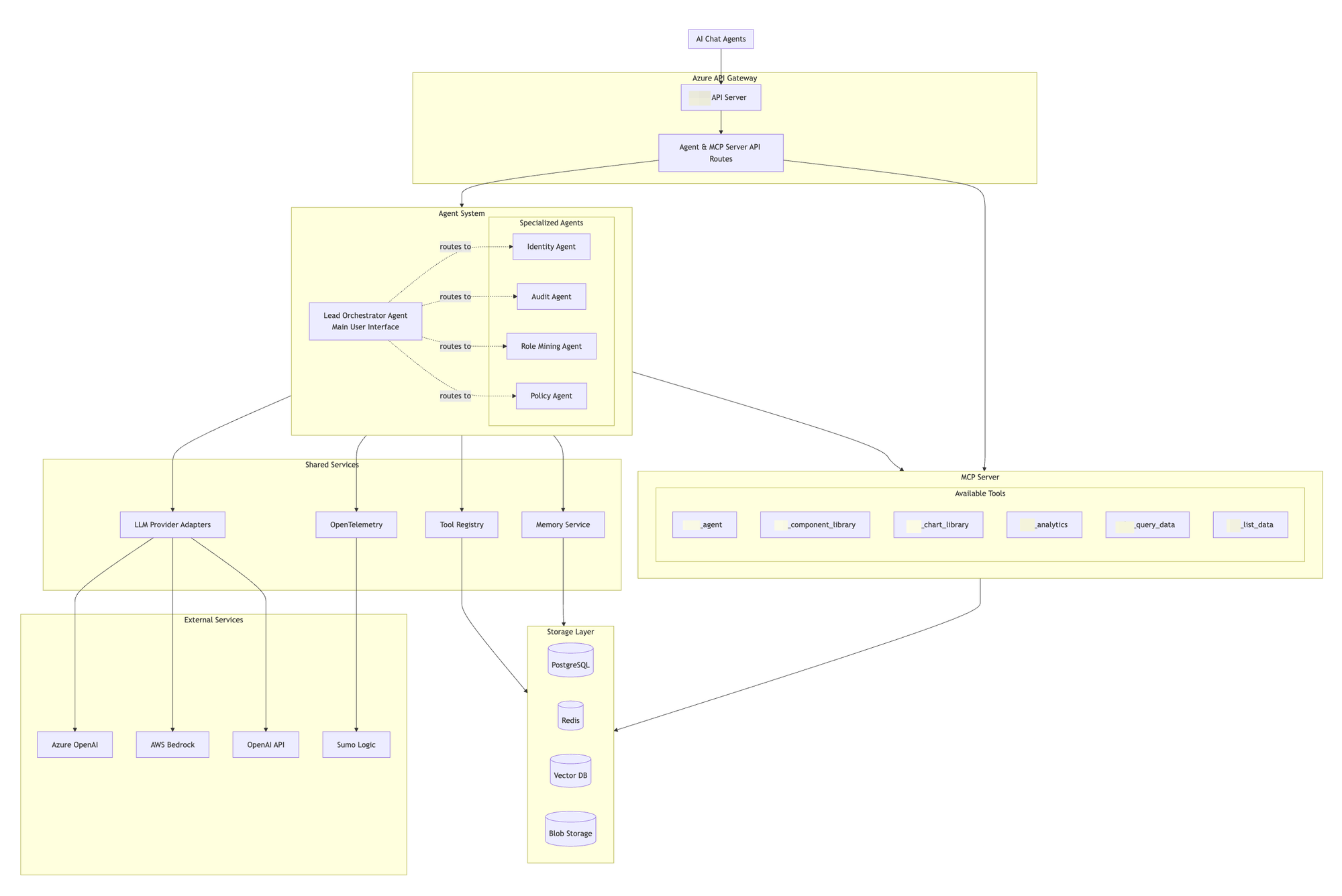

The heart of the solution was a Model Context Protocol (MCP) server that acted as an intelligent bridge between AI agents and our identity datasets. The prototype included several sophisticated AI-powered features:

Query Translation Engine: The system could interpret natural language questions and convert them into precise jq queries against our structured datasets. For example, "Who is terminated and has any access?" would be translated into a filter operation that identified terminated users whose application accounts still had access, in some cases privileged access.
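To make the translation step more concrete, here is a minimal sketch of what the target of such a translation might look like. The jq filter string and the field names (`status`, `accounts`) are assumptions chosen for illustration, not the prototype's actual schema or generated queries; the Python function simply mirrors the same filter logic so it can be run without jq installed.

```python
import json

# Hypothetical jq filter an AI agent might produce for
# "Who is terminated and has any access?" (field names are assumptions).
HYPOTHETICAL_JQ = 'select(.status == "terminated" and (.accounts | length > 0))'


def terminated_with_access(users):
    """Python equivalent of the filter above: terminated users who still hold accounts."""
    return [
        u for u in users
        if u.get("status") == "terminated" and len(u.get("accounts", [])) > 0
    ]


# Illustrative records only; not real customer data.
SAMPLE_USERS = [
    {"name": "alice", "status": "active", "accounts": [{"app": "crm", "privileged": False}]},
    {"name": "bob", "status": "terminated", "accounts": [{"app": "vpn", "privileged": True}]},
    {"name": "carol", "status": "terminated", "accounts": []},
]

if __name__ == "__main__":
    for user in terminated_with_access(SAMPLE_USERS):
        print(json.dumps(user))
```

In this sample, only the terminated user who still holds an account is returned; a terminated user with no remaining accounts is not flagged.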

Streaming Data Processing: Using NDJSON (newline-delimited JSON) format, the system could stream large datasets efficiently. This design choice, made in partnership with our internal engineering team, ensured fast query responses without overwhelming system resources.
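The benefit of NDJSON for streaming can be sketched in a few lines: because every line is a complete JSON document, a reader can process one record at a time instead of parsing the whole export. The function names and sample fields below are assumptions for illustration and are not taken from the prototype.

```python
import io
import json
from typing import Iterator, TextIO


def iter_ndjson(stream: TextIO) -> Iterator[dict]:
    """Yield one parsed record per non-empty line, without loading the whole file."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


def count_matching(stream: TextIO, predicate) -> int:
    """Count records satisfying predicate while holding only one record in memory."""
    return sum(1 for record in iter_ndjson(stream) if predicate(record))


# Illustrative data; the field names are assumptions.
SAMPLE = io.StringIO(
    '{"id": 1, "status": "active"}\n'
    '{"id": 2, "status": "terminated"}\n'
    '\n'
    '{"id": 3, "status": "terminated"}\n'
)

if __name__ == "__main__":
    print(count_matching(SAMPLE, lambda r: r["status"] == "terminated"))
```

The same generator works unchanged on an open file handle, which is what keeps memory use flat as exports grow.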

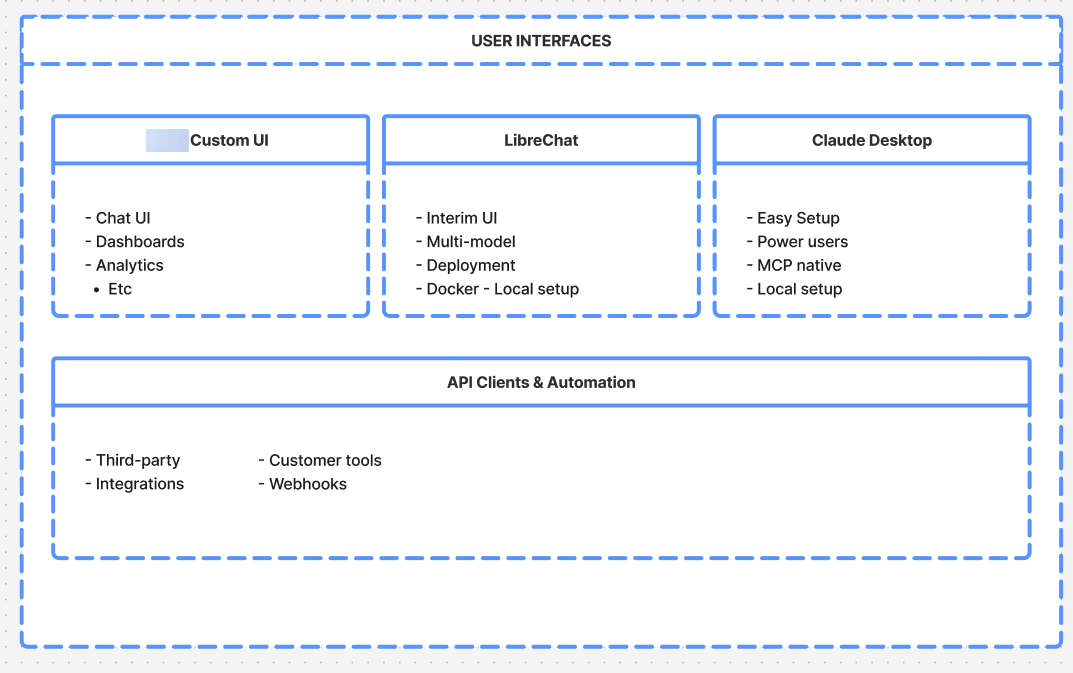

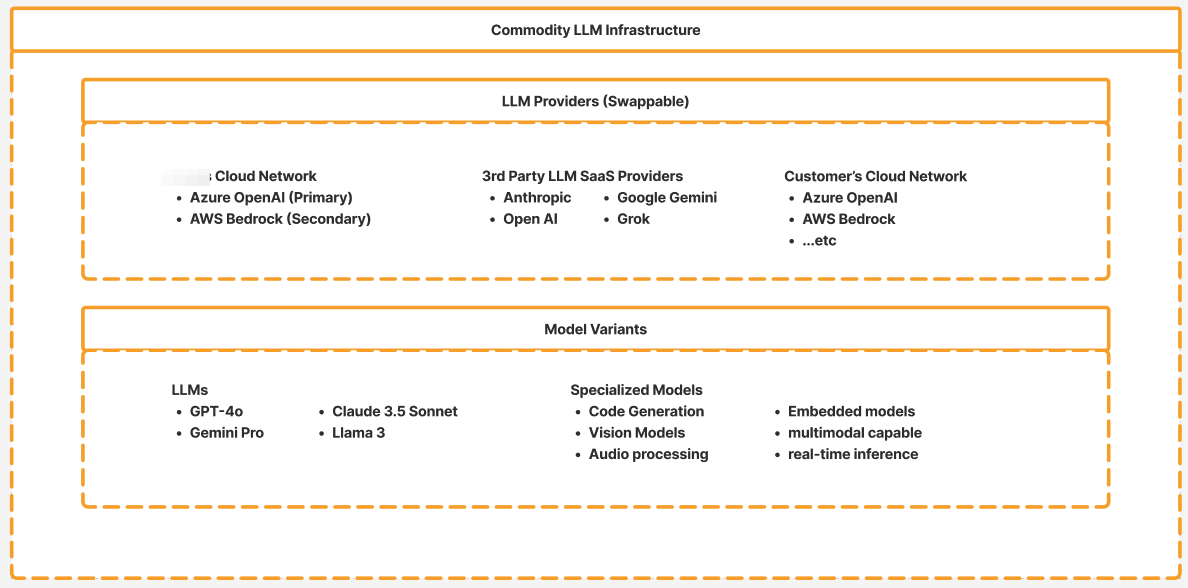

Multi-Modal AI Support: The architecture was designed to work with various AI interfaces, from Claude Desktop to LibreChat, ensuring flexibility in how customers accessed the capabilities.
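As a rough illustration of the bridge role described above, the sketch below dispatches a simplified MCP-style `tools/call` request to a registered tool and wraps the result as text content. It is deliberately not built on an MCP SDK and omits most of the protocol (initialization, tool listing, transports); the tool name `query_identities`, its argument, and the in-memory dataset are all hypothetical.

```python
import json

# Illustrative in-memory dataset; a real server would read exported NDJSON files.
DATASET = [
    {"name": "bob", "status": "terminated", "accounts": ["vpn"]},
    {"name": "alice", "status": "active", "accounts": ["crm"]},
]


def query_identities(status: str) -> list:
    """Hypothetical tool: return identities with the given status."""
    return [r for r in DATASET if r["status"] == status]


TOOLS = {"query_identities": query_identities}


def _error(request: dict, code: int, message: str) -> dict:
    """Build a JSON-RPC error response for the given request."""
    return {"jsonrpc": "2.0", "id": request.get("id"),
            "error": {"code": code, "message": message}}


def handle_tool_call(request: dict) -> dict:
    """Dispatch a simplified JSON-RPC 'tools/call' request to a registered tool."""
    if request.get("method") != "tools/call":
        return _error(request, -32601, "Method not found")
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return _error(request, -32602, "Unknown tool")
    try:
        payload = json.dumps(tool(**params.get("arguments", {})))
        is_error = False
    except (TypeError, KeyError) as exc:
        # Report the failure as text so the calling model can correct its request.
        payload, is_error = f"Tool error: {exc}", True
    return {
        "jsonrpc": "2.0",
        "id": request.get("id"),
        "result": {"content": [{"type": "text", "text": payload}], "isError": is_error},
    }
```

Returning tool failures as an error-flagged result rather than raising is one way to give the calling model enough information to retry with a corrected request.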

Key AI Capabilities Delivered

The prototype successfully demonstrated several core use cases. To define initial success, I collaborated with our identity services experts to select two initial questions:

- Compliance risk identification: "Who is terminated and has any access?" → Automated identification of potential security risks

- Security anomaly detection: "What unowned accounts have been used in the past week?" → Proactive identification of suspicious activity
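The second question combines an ownership check with a time window, which is easy to get subtly wrong. The following is a minimal sketch under stated assumptions: the `owner` and `last_used` field names, the ISO 8601 timestamp format, and the sample accounts are all hypothetical and do not reflect the real dataset.

```python
from datetime import datetime, timedelta, timezone


def unowned_recently_used(accounts, now, window=timedelta(days=7)):
    """Return accounts with no owner whose last use falls within the window before now."""
    cutoff = now - window
    results = []
    for account in accounts:
        last_used = account.get("last_used")
        if account.get("owner") is None and last_used is not None:
            if datetime.fromisoformat(last_used) >= cutoff:
                results.append(account)
    return results


# Illustrative accounts only; names, owners, and dates are made up.
SAMPLE_ACCOUNTS = [
    {"name": "svc-backup", "owner": None, "last_used": "2025-06-10T12:00:00+00:00"},
    {"name": "svc-legacy", "owner": None, "last_used": "2025-01-05T08:30:00+00:00"},
    {"name": "svc-deploy", "owner": "platform-team", "last_used": "2025-06-11T09:00:00+00:00"},
    {"name": "svc-unused", "owner": None, "last_used": None},
]

if __name__ == "__main__":
    reference_time = datetime(2025, 6, 12, tzinfo=timezone.utc)
    for account in unowned_recently_used(SAMPLE_ACCOUNTS, reference_time):
        print(account["name"])
```

Passing `now` explicitly, rather than reading the clock inside the function, keeps the check reproducible when the same question is asked against a dated export.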

Engineering Choices Under Constraints

Working under an extreme timeline (at this point, I had ~3 days to deliver a functional software prototype), I made several critical engineering decisions:

Data Format Selection: I partnered with the internal engineering team to switch the existing experimental database exports from CSV to NDJSON. This made it possible to use jq as the underlying query engine and to stream results efficiently without requiring direct access to production databases.
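For readers unfamiliar with the two formats, a CSV-to-NDJSON conversion can be sketched with the Python standard library alone. This is an illustrative sketch, not the export pipeline the engineering team built; the column names in the usage note are assumptions.

```python
import csv
import io
import json
from typing import TextIO


def csv_to_ndjson(src: TextIO, dst: TextIO) -> int:
    """Write each CSV row as one JSON object per line; return the row count."""
    count = 0
    for row in csv.DictReader(src):
        # Every value arrives as a string; type coercion is left out of this sketch.
        dst.write(json.dumps(row) + "\n")
        count += 1
    return count


if __name__ == "__main__":
    source = io.StringIO("user,status\nbob,terminated\nalice,active\n")
    target = io.StringIO()
    csv_to_ndjson(source, target)
    print(target.getvalue(), end="")
```

Each output line is an independent JSON object keyed by the CSV header, which is the shape jq can filter record by record.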

Security-First Design: Ensured all data access went through secure, controlled channels rather than direct database connections, maintaining security standards even under rapid development constraints.

Modular Architecture: Built the system with clear separation of concerns, making it easier to maintain and extend even though initial development was solo.

The engineering phase demonstrated that sophisticated AI capabilities could be delivered quickly when the right architectural decisions were made upfront, even under significant resource and timeline constraints.

AI Prototype Validation: Proving the Product Direction

With a working prototype in hand, the validation phase became critical for proving that our AI-powered approach could deliver real value to customers. This phase required both internal testing to ensure technical reliability and external validation to confirm market demand.

Internal Validation First

Before engaging customers, I partnered with our identity experts from our internal services team to thoroughly test the prototype. This collaboration served multiple purposes:

- Technical validation: Ensuring the AI queries produced accurate results against our actual datasets

- Use case development: Generating realistic scenarios that customers might encounter

- Dashboard proof-of-concept: Creating visual reports of AI-generated insights

- Performance testing: Validating that response times met user expectations

This internal testing phase revealed both the capabilities and limitations of our approach, allowing us to refine the prototype before customer exposure.

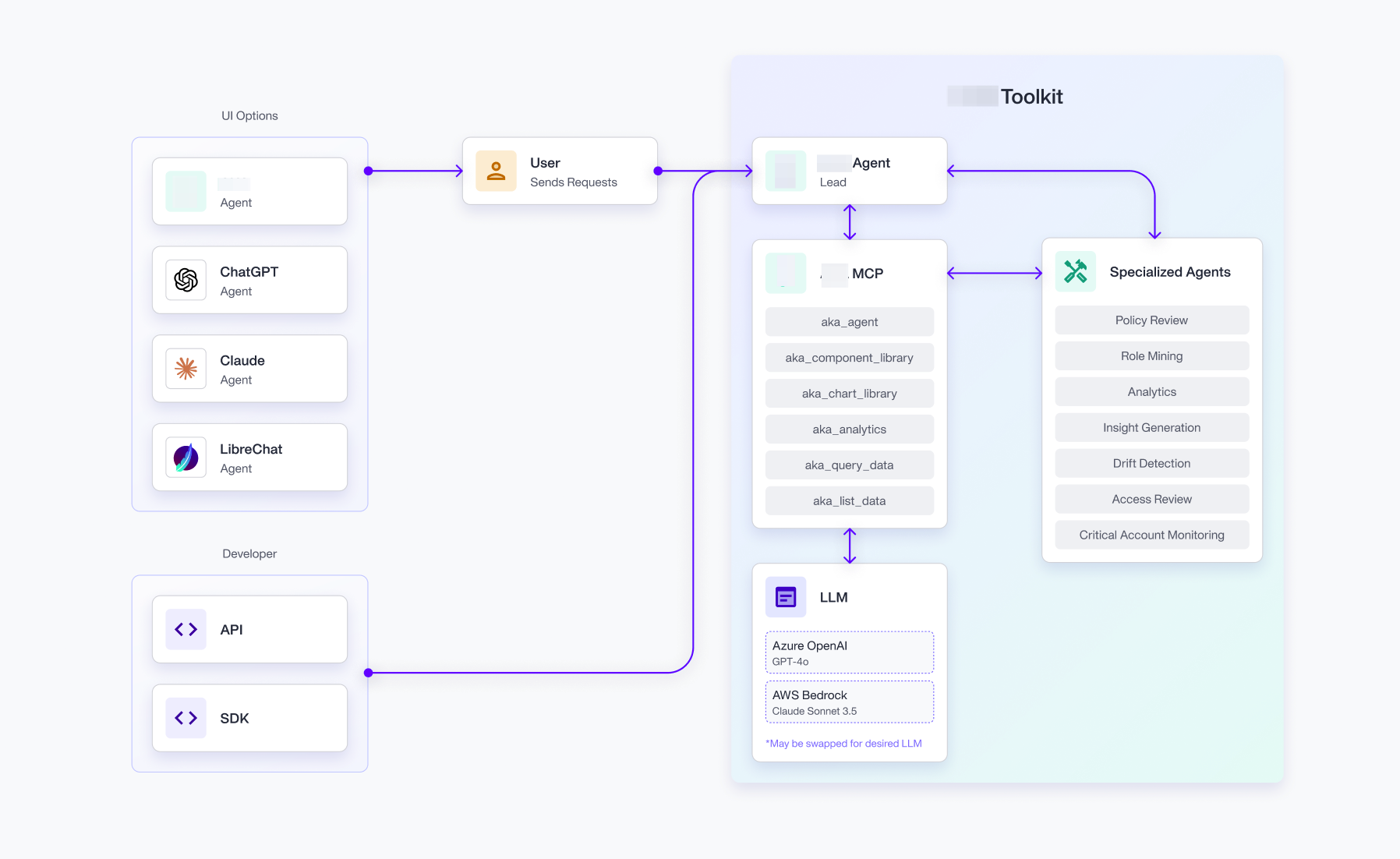

AI Platform Testing

The prototype was designed to work across multiple AI interfaces, and we successfully tested integration with:

- Claude Desktop: For users who were already using SaaS LLM providers in their daily workflows.

- LibreChat: For users who were running on-premises AI solutions to avoid sending identity datasets to third-party SaaS providers.

This approach validated that our MCP architecture could adapt to different customer preferences and technical environments, and could grow alongside future product development.

Validated Use Cases

Through testing, we confirmed several core use cases that demonstrated value:

Natural Language Identity Data Exploration: Users could ask complex questions about identity relationships without needing to understand underlying data structures or query languages.

Automated Compliance Risk Detection: The system could proactively identify potential security risks, such as terminated users with remaining access privileges or unowned accounts with recent activity.

AI-Generated Executive Reporting: The prototype could transform technical identity data into executive-friendly summaries and reports, addressing the CISO reporting gap identified by customer interviews.

Service Account Management Automation: The system could analyze patterns in service account usage and identify optimization opportunities, addressing a universal customer pain point.

Technical Performance Validation

The prototype demonstrated strong technical performance under real-world testing conditions:

- Query Performance: 29 AI queries executed over 18 minutes across several LLM chat conversations during the prototype testing phases.

- Reliability: 100% uptime during all user interactions

- Success Rate: 86% of queries completed successfully. Failures were primarily due to invalid jq syntax rather than system issues, and all of them were immediately corrected by the LLMs.

- Response Times: Consistent sub-500ms response times for most queries
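The reported figures can be cross-checked with simple arithmetic. The sketch below assumes the success percentage was rounded to the nearest whole number; under that assumption it finds which success counts out of 29 are consistent with 86%, and computes the average pacing of queries over the 18-minute window (which reflects conversation pace, not server latency).

```python
TOTAL_QUERIES = 29         # reported number of AI queries
REPORTED_SUCCESS_PCT = 86  # reported success rate, assumed rounded to a whole percent
TEST_MINUTES = 18          # reported duration of the test window


def consistent_success_counts(total: int, reported_pct: int) -> list:
    """Return every success count whose rounded percentage equals the reported one."""
    return [k for k in range(total + 1) if round(100 * k / total) == reported_pct]


def average_seconds_between_queries(total: int, minutes: int) -> float:
    """Average spacing of queries across the window, in seconds."""
    return minutes * 60 / total


if __name__ == "__main__":
    print(consistent_success_counts(TOTAL_QUERIES, REPORTED_SUCCESS_PCT))
    print(round(average_seconds_between_queries(TOTAL_QUERIES, TEST_MINUTES), 1))
```

Under the rounding assumption, 25 successful queries out of 29 is the only count that matches, implying 4 failed queries, with queries arriving roughly every 37 seconds on average.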

Result Artifact

Below is a screenshot of an artifact generated during prototype testing with Claude.

Product Direction Confirmation

The validation phase provided clear confirmation that our AI-powered identity analysis approach addressed real market needs. Customers not only understood the value proposition but were actively interested in adopting the technology. The combination of technical performance and customer enthusiasm validated that we were building something the market actually wanted.

Most importantly, the validation revealed that customers were already attempting to solve these problems with basic AI tools, confirming that our more sophisticated, purpose-built platform could capture significant market demand.

When we presented the MCP prototype to the customers, all responded positively, resulting in scheduled engagements to onboard and adopt our AI solutions as early adopters.

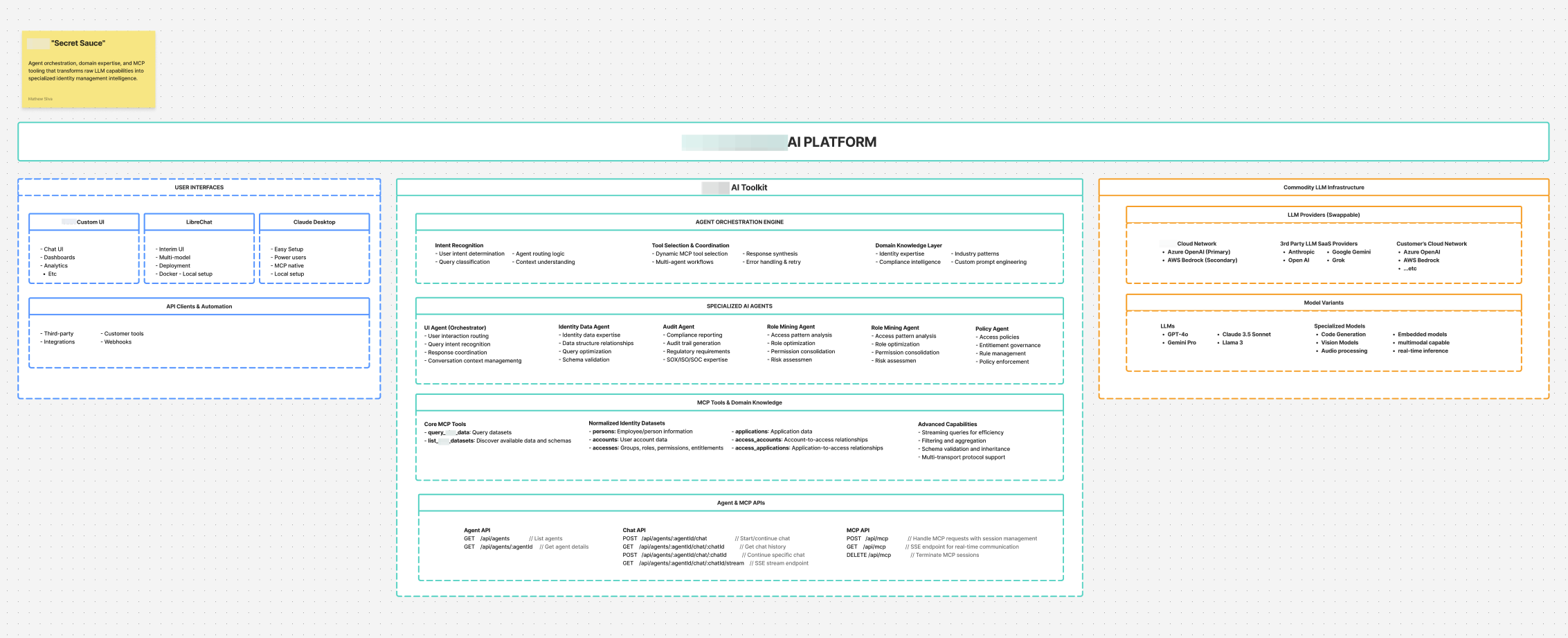

AI Platform Future: Strategic Direction from Validated Prototype

The successful prototype validation created a clear foundation for strategic planning and resource allocation. With proven customer demand and technical feasibility, the focus shifted to scaling the validated concept into a comprehensive AI-powered identity platform that could capture the market opportunity we had identified.

Current AI Platform Development

Several initiatives were launched based on the prototype validation:

MCP Remote Server Integration (Epic ENG-1609): After reviewing a technical design document I had put together, the engineering team committed to integrating the prototype MCP server into our production infrastructure, with a target of an end-of-June SaaS release. This integration would add multi-tenant support and allow our early-adopter customers to install and use the server in their existing AI tooling and workflows.

Future AI Platform Design

AI Platform Architecture Design: Beyond the basic MCP server, I began designing a comprehensive AI agent system that could support specialized agents for different identity management use cases. This architecture would enable more sophisticated AI interactions while maintaining the simplicity that made the prototype compelling. Below are the visualizations I put together to communicate this with the team.

My Strategic Recommendations

We should prioritize a self-service identity AI platform as the fastest and most capital-efficient path to product-market fit for an AI-native solution. Customers have already validated both need and readiness: each has existing AI workflows, strong executive reporting pain, and compatible infrastructure. A SaaS onboarding flow with an initial focus on AI-powered executive reporting enables us to deliver measurable value in under 30 days while supporting growth into more advanced workflows like normalization and role mining.

This approach lets early adopters prove out value independently while our services team ensures their success. It also delivers the SaaS engagement signals VCs expect, without the delays and friction of traditional enterprise deployment cycles. We already have the architecture, customer interest, and implementation plan. We just need to execute.

Conclusion

This 0-to-1 product development journey demonstrated that even under extreme organizational constraints, methodical product discovery combined with rapid prototyping can validate new market directions and establish technical foundations for growth. The proof of concept represents more than a successful prototype: it validates a strategic direction that positions the company to lead an emerging AI-native identity management market.

A Recap of Key Themes

- End-to-end ownership of the product lifecycle, from initial discovery to validated prototype and production handoff

- Methodical approach to product design using an adapted Google Ventures Design Sprint

- Rapid prototyping under extreme constraints while maintaining technical rigor and security standards

- Customer-driven development grounded in direct validation calls with enterprise stakeholders

- Strategic technical decisions that balanced performance, scalability, and feasibility

- Cross-functional collaboration across engineering, services, and leadership despite resource constraints

- Clear articulation of product-market fit through AI prototype testing and adoption

- Early architectural planning to support future roadmap and multi-tenant SaaS delivery

- Practical demonstration that AI-native identity analysis is not just viable but urgently needed by potential customers